Kubernetes

An orchestrator for containers that helps you manage and scale your applications.

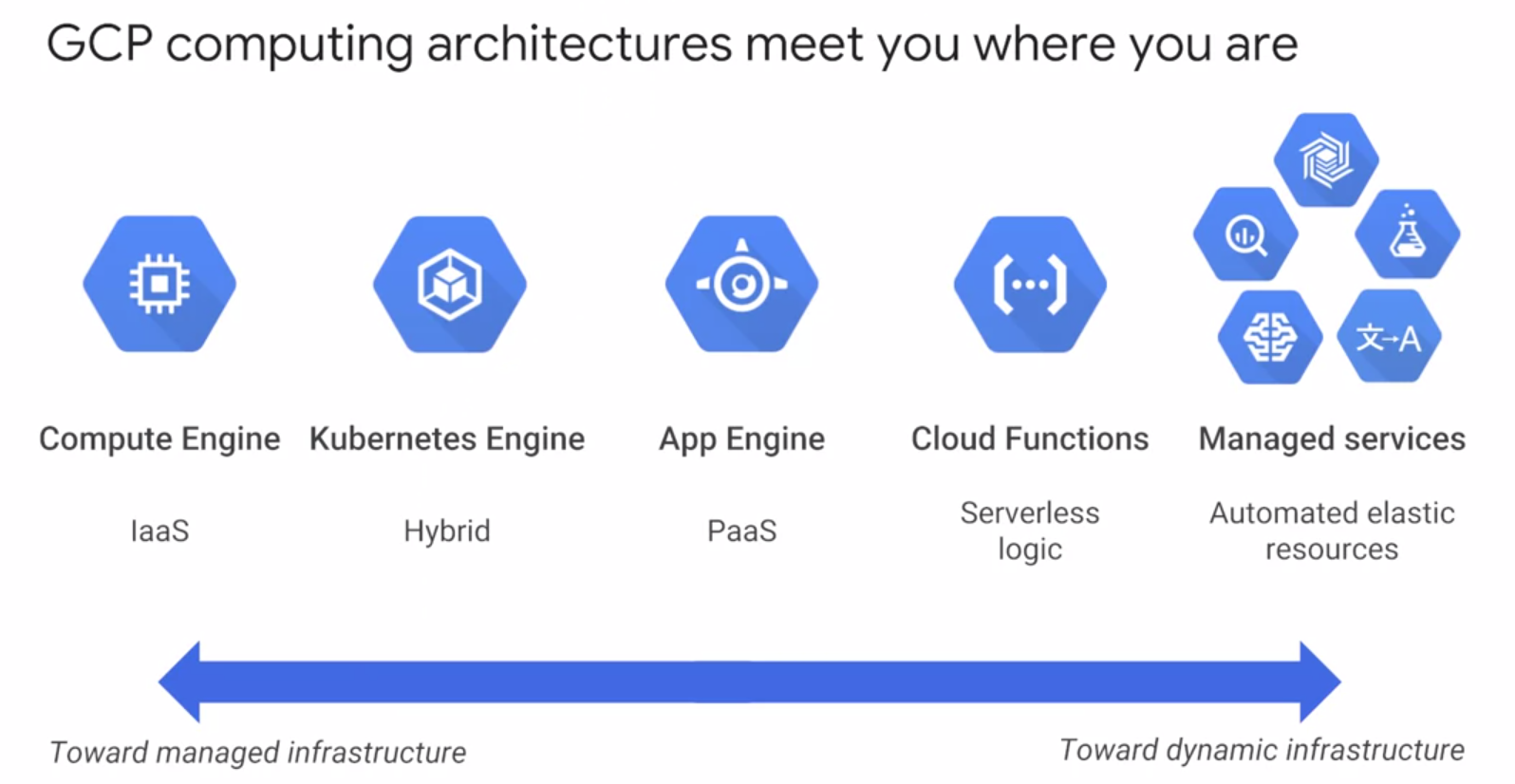

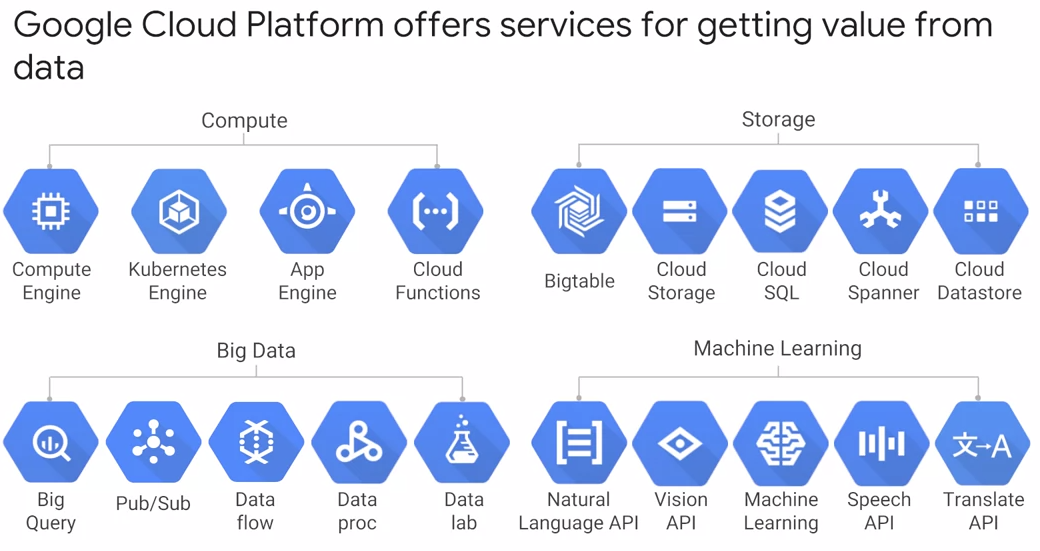

Kubernetes Engine

Google Kubernetes Engine (GKE) offers Kubernetes as a managed service in Google Cloud.

You can create a Kubernetes cluster with Kubernetes Engine.
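Here is a minimal sketch that builds the command for creating a cluster. It assumes the `gcloud` CLI is installed and authenticated. The helper `gke_create_cluster_cmd` is hypothetical, and the cluster name, zone and node count are illustrative values. The code only builds the argument list; it does not run anything.

```python
import shlex


def gke_create_cluster_cmd(name: str, zone: str, num_nodes: int) -> list[str]:
    """Build the gcloud argv that creates a GKE cluster (hypothetical helper)."""
    if num_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,                  # where the node VMs are created
        "--num-nodes", str(num_nodes),   # how many Compute Engine VMs act as nodes
    ]


if __name__ == "__main__":
    cmd = gke_create_cluster_cmd("demo-cluster", "us-central1-a", 3)
    # Print a shell-ready version of the command for inspection.
    print(shlex.join(cmd))
```

Passing `cmd` to `subprocess.run(cmd, check=True)` would actually create the cluster, which incurs costs. Printing it first lets you check the flags.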

Cluster

A set of master components that control the system as a whole, plus a set of nodes that run containers.

In Kubernetes, a node represents a computing instance.

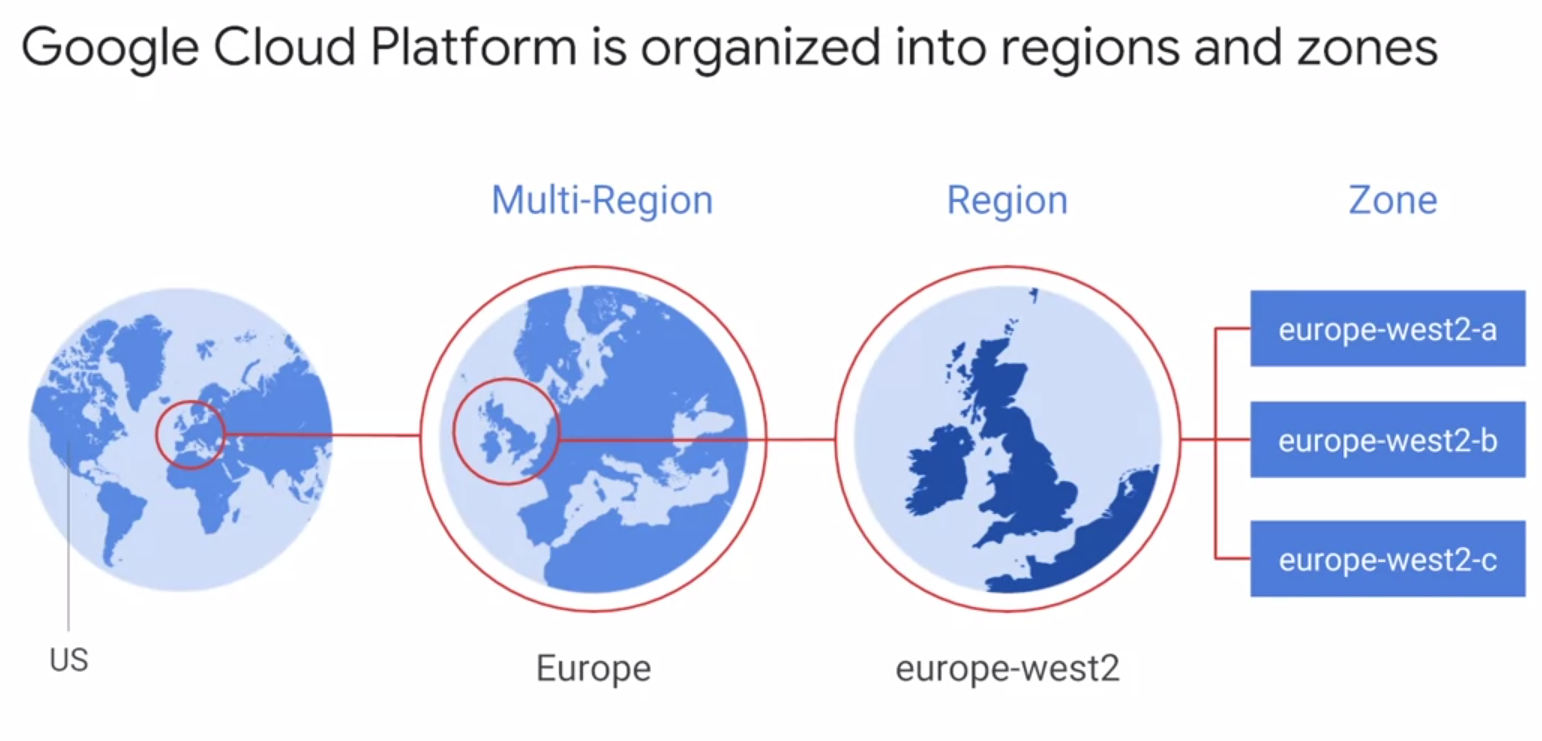

In GCP, a node is a VM running in Compute Engine.

Pod

The smallest deployable unit in Kubernetes. A Pod often has a single container, but it can have several. The containers in one Pod share networking (the same IP address and port space) and can mount the same storage volumes.
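The sharing described above can be sketched as a Pod manifest with two containers that mount one `emptyDir` volume. The helper `two_container_pod` is hypothetical, and the container names, the `busybox` image, the `/data` path and the shell commands are illustrative assumptions. The manifest is printed as JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json


def two_container_pod(name: str) -> dict:
    """Return a Pod manifest whose two containers share one volume (illustrative)."""
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Declared once at Pod level; every container that mounts it sees the same files.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "writer",
                    "image": "busybox",
                    # Writes a file into the shared volume, then stays alive.
                    "command": ["sh", "-c", "date > /data/now.txt && sleep 3600"],
                    "volumeMounts": [dict(shared_mount)],
                },
                {
                    "name": "reader",
                    "image": "busybox",
                    # Reads the file the other container wrote to the same volume.
                    "command": ["sh", "-c", "sleep 5 && cat /data/now.txt && sleep 3600"],
                    "volumeMounts": [dict(shared_mount)],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod("shared-demo"), indent=2))
```

Both containers are scheduled together on the same node and share one network namespace, which is why they can also reach each other over `localhost`.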

Deployment

A Deployment represents a group of replicas of the same Pod. It keeps the requested number of Pods running and replaces them even if a node fails.
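A Deployment can be sketched as a manifest that states the desired replica count plus a Pod template. The helper `deployment`, the name `web`, the image `nginx:1.25` and port 80 are illustrative assumptions. In `apps/v1` the selector must match the template's labels, because that is how the Deployment finds the Pods it owns.

```python
import json


def deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Return an apps/v1 Deployment manifest (hypothetical helper, illustrative values)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: Kubernetes works to keep this many Pods running.
            "replicas": replicas,
            # Which Pods belong to this Deployment; must match the template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment("web", "nginx:1.25"), indent=2))
```

If a node fails, fewer Pods are running than `replicas` asks for. Kubernetes then creates replacement Pods from the template on the remaining nodes.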

Service

The fundamental way Kubernetes represents load balancing. A Service of type LoadBalancer gets a public IP address, so clients outside the cluster can reach the Pods behind it.

In GKE, this kind of load balancer is a network load balancer.
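Here is a minimal sketch of a Service that exposes Pods externally. It assumes the target Pods carry the label `app: web`. The helper `load_balancer_service`, the Service name and the port numbers are illustrative assumptions.

```python
import json


def load_balancer_service(app: str, port: int = 80, target_port: int = 80) -> dict:
    """Return a v1 Service manifest of type LoadBalancer (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{app}-lb"},
        "spec": {
            # Asks the cloud provider for an external load balancer with a public IP.
            "type": "LoadBalancer",
            # Traffic is spread across all Pods carrying this label.
            "selector": {"app": app},
            # `port` is exposed by the Service; `targetPort` is the container port.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(load_balancer_service("web"), indent=2))
```

On GKE, applying a Service like this provisions the network load balancer mentioned above. Its public address appears in the EXTERNAL-IP column of `kubectl get service` once it is ready.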

Anthos

Google's platform for hybrid and multi-cloud distributed systems and service management. It rests on Kubernetes and on Kubernetes Engine deployed on-premises.