Big Data

Serverless means you don’t have to worry about provisioning compute instances to run your jobs; the services are fully managed.

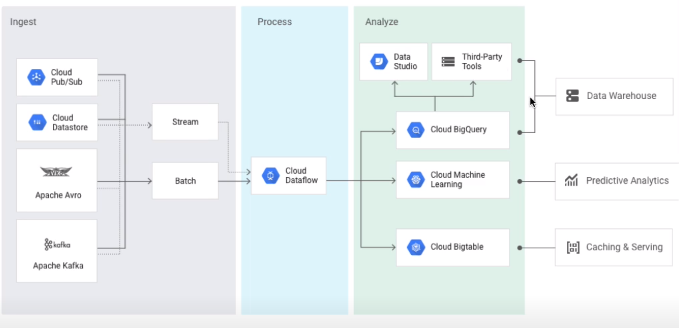

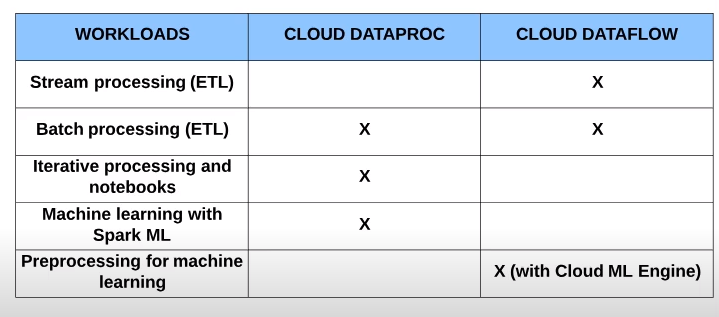

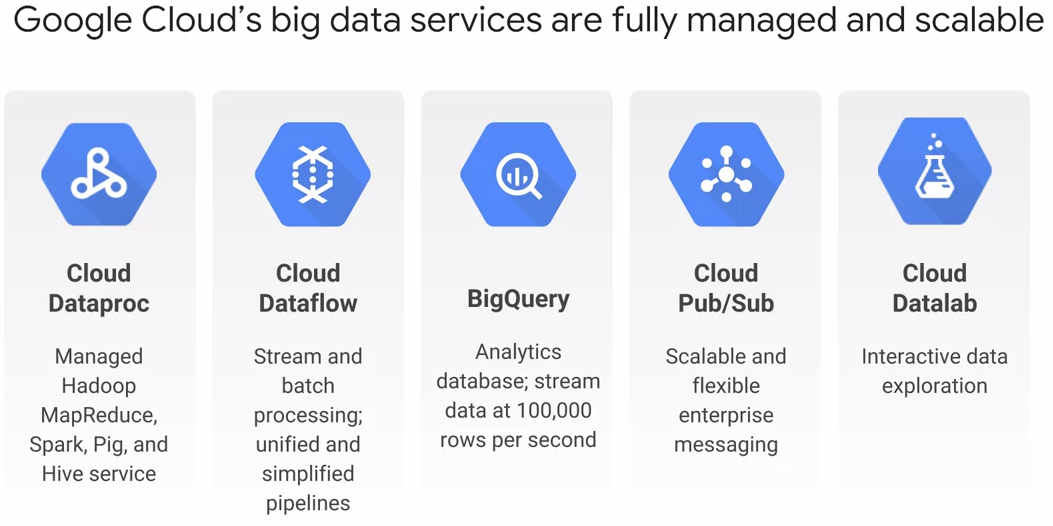

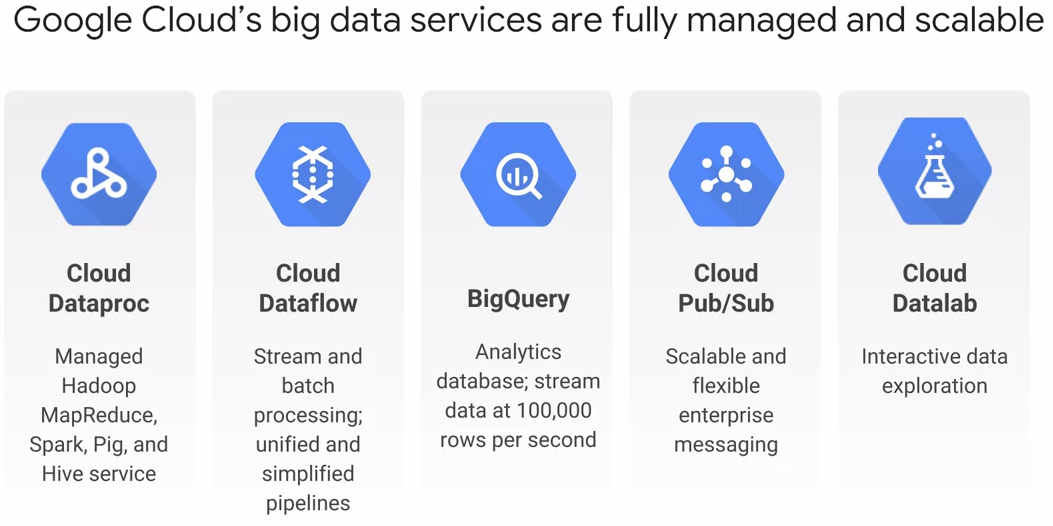

Cloud Dataproc

is managed Hadoop

- Fast, easy, managed way to run Hadoop, Spark/Hive/Pig on GCP

- Create clusters in 90 seconds

- Scale clusters up and down even when jobs are running

- Easily migrate on-premises Hadoop jobs to the cloud

- Save money with preemptible instances

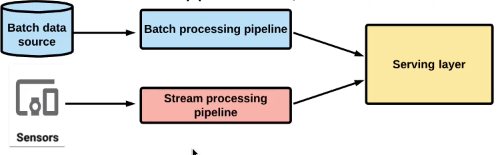

Cloud Dataflow

is managed data pipelines

- Processes data using Compute Engine

- Clusters are sized for you

- Automated scaling

- Write code for batch and streaming

Why use Cloud Dataflow?

- ETL

- Data analytics: batch or streaming

- Orchestration: create pipelines that coordinate services, including external services

- Integrates with GCP services
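The "write code for batch and streaming" idea can be sketched in plain Python: one transform, applied unchanged to a bounded list and to a generator standing in for an unbounded source. This is only an illustration of the programming model, not the Dataflow SDK; the event shape, `count_per_window`, and `event_stream` are hypothetical names, and the sketch assumes events arrive in timestamp order.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, key); hypothetical event shape


def count_per_window(
    events: Iterable[Event], window_secs: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start, counts per key) for each fixed window, in order.

    Assumes events arrive in timestamp order; a real pipeline also has to
    handle late and out-of-order data.
    """
    current_start = None
    counts: Dict[str, int] = defaultdict(int)
    for ts, key in events:
        start = ts - ts % window_secs  # start of the window this event falls in
        if current_start is not None and start != current_start:
            # The previous window is complete: emit it and start a new one.
            yield current_start, dict(counts)
            counts = defaultdict(int)
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last window


# Batch: a bounded, in-memory collection.
batch_events = [(0, "a"), (3, "b"), (7, "a"), (12, "a"), (14, "b")]
batch_result = list(count_per_window(batch_events, window_secs=10))


def event_stream() -> Iterator[Event]:
    """Stand-in for an unbounded source; here it just replays the same events."""
    for event in batch_events:
        yield event


# Streaming: the same transform consumes events one at a time as they arrive.
stream_result = list(count_per_window(event_stream(), window_secs=10))
```

Because the transform only iterates over its input, it does not care whether the source is finite. That is the property that lets one pipeline definition serve both modes.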

BigQuery

is managed data warehouse

- It provides near real-time interactive analysis of massive datasets using SQL

- No cluster maintenance

- Compute and Storage are separated with a terabit network in between

- You only pay for storage and processing used

- Automatic discount for long-term data storage (when data has sat unmodified in BigQuery for 90 days, Google drops the price of storage)
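The storage discount can be worked through as simple arithmetic. The sketch below assumes the 90-day clock is counted from the last modification to a table; the `Table` class, the function name, and both per-GB rates are hypothetical placeholders, not published prices.

```python
from dataclasses import dataclass
from typing import Iterable

LONG_TERM_THRESHOLD_DAYS = 90  # threshold taken from the notes above


@dataclass
class Table:
    name: str
    size_gb: float
    days_since_last_edit: int


def monthly_storage_cost(
    tables: Iterable[Table], active_rate: float, long_term_rate: float
) -> float:
    """Sum monthly storage cost, charging the long-term rate to tables
    that have gone unmodified for at least the threshold."""
    total = 0.0
    for table in tables:
        is_long_term = table.days_since_last_edit >= LONG_TERM_THRESHOLD_DAYS
        rate = long_term_rate if is_long_term else active_rate
        total += table.size_gb * rate
    return total


tables = [
    Table("events_current", size_gb=1000, days_since_last_edit=10),
    Table("events_archive", size_gb=500, days_since_last_edit=120),
]

# Hypothetical rates in dollars per GB per month, for illustration only.
cost = monthly_storage_cost(tables, active_rate=0.02, long_term_rate=0.01)
```

With these placeholder rates, the archive table is billed at half the active rate without any action on your part, which is why the discount is described as automatic.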

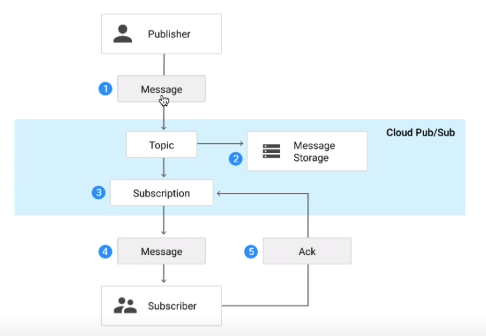

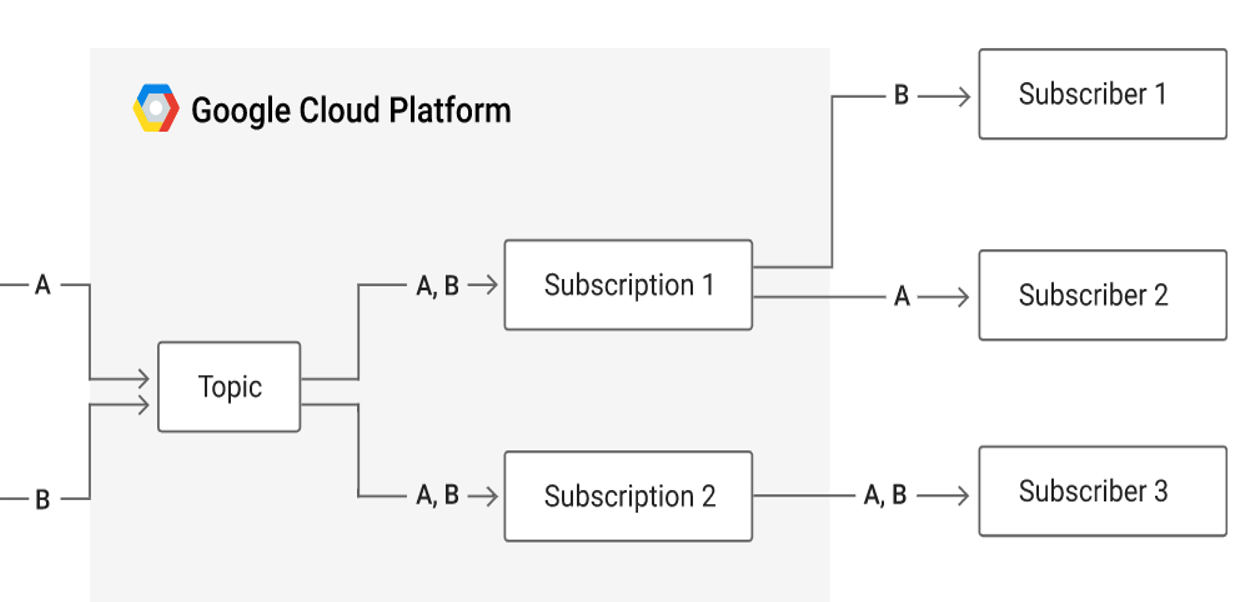

Cloud Pub/Sub

is scalable, reliable messaging

- Supports many-to-many asynchronous messaging

- Push/pull to topics

- Support for offline consumers

- At-least-once delivery policy
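At-least-once delivery is easiest to see in a toy model. The sketch below is an in-memory simulation, not the Pub/Sub client library; `Topic`, `Subscription`, and `expire_unacked` are hypothetical names. It assumes a message stays with a subscription until it is acknowledged and is redelivered if the acknowledgement never arrives.

```python
import itertools
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple


class Subscription:
    """Holds messages for one consumer group until each one is acked."""

    def __init__(self) -> None:
        self._queue: Deque[str] = deque()
        self._outstanding: Dict[int, str] = {}  # pulled but not yet acked
        self._ack_ids = itertools.count(1)

    def deliver(self, message: str) -> None:
        self._queue.append(message)

    def pull(self) -> Optional[Tuple[int, str]]:
        if not self._queue:
            return None
        message = self._queue.popleft()
        ack_id = next(self._ack_ids)
        self._outstanding[ack_id] = message
        return ack_id, message

    def ack(self, ack_id: int) -> None:
        self._outstanding.pop(ack_id, None)

    def expire_unacked(self) -> None:
        """Simulate the ack deadline passing: unacked messages are requeued."""
        self._queue.extend(self._outstanding.values())
        self._outstanding.clear()


class Topic:
    """Fans every published message out to all subscriptions (many-to-many)."""

    def __init__(self) -> None:
        self.subscriptions: List[Subscription] = []

    def subscribe(self) -> Subscription:
        sub = Subscription()
        self.subscriptions.append(sub)
        return sub

    def publish(self, message: str) -> None:
        for sub in self.subscriptions:
            sub.deliver(message)


topic = Topic()
billing = topic.subscribe()
audit = topic.subscribe()  # an "offline" consumer: its messages simply wait
topic.publish("order-1")
topic.publish("order-2")

received: List[str] = []
ack_id, msg = billing.pull()
received.append(msg)      # the consumer crashes here, before acking "order-1"
billing.expire_unacked()  # the deadline passes, so "order-1" is requeued

while (item := billing.pull()) is not None:
    ack_id, msg = item
    received.append(msg)
    billing.ack(ack_id)
```

In this run the billing consumer sees `order-1` twice, so handlers need to be idempotent. The audit subscription still holds both messages for whenever its consumer comes online.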

Cloud Datalab

interactive data exploration (Notebook)

Built on Jupyter (formerly IPython)

Easily deploy models to BigQuery. You can visualize data with Google Charts or matplotlib.

Cloud Machine Learning Platform

- TensorFlow

- Cloud ML

- Machine Learning APIs

Why use Cloud Machine Learning Platform?

- Classification and regression

- Recommendation

- Anomaly detection

- Image and video analytics

- Text analytics

Cloud Vision API

- Gain insight from images

- Detect inappropriate content

- Analyze sentiment

- Extract text
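As a rough sketch, the four capabilities above can be requested together in a single JSON body for the Vision API's REST `images:annotate` method. The helper name and the short keys in `FEATURES` are hypothetical. Mapping "analyze sentiment" to `FACE_DETECTION` (which reports emotion likelihoods for detected faces) is an assumption about what that bullet refers to. The code only builds the request body; sending it and authenticating are left out.

```python
import base64
import json
from typing import Dict, List

# Short names (hypothetical) mapped to Vision API feature types.
FEATURES: Dict[str, str] = {
    "labels": "LABEL_DETECTION",                       # gain insight from images
    "inappropriate_content": "SAFE_SEARCH_DETECTION",  # detect inappropriate content
    "faces": "FACE_DETECTION",                         # assumed source of sentiment
    "text": "TEXT_DETECTION",                          # extract text
}


def build_annotate_request(image_bytes: bytes, wanted: List[str]) -> dict:
    """Build the JSON body for one image with the requested feature types."""
    return {
        "requests": [
            {
                # Image bytes are sent inline as base64 text.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [{"type": FEATURES[name]} for name in wanted],
            }
        ]
    }


# Placeholder bytes stand in for a real image file's contents.
body = build_annotate_request(b"not-a-real-image", ["labels", "text"])
payload = json.dumps(body)  # what would be POSTed to the images:annotate endpoint
```

Several feature types can share one request, so a single call can return labels and extracted text for the same image.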

Cloud Speech API

- Can return text in real time

- Highly accurate, even in noisy environments

- Access from any device

Cloud Translation API

- Translate strings

- Programmatically detect a document’s language

- Support for dozens of languages

Cloud Video Intelligence API

- Annotate the contents of video

- Detect scene changes

- Flag inappropriate content

- Support for a variety of video formats