Dataproc

- Managed service for running Hadoop, Spark, Hive, and Pig

- Lift and shift existing Hadoop/Spark workloads to GCP

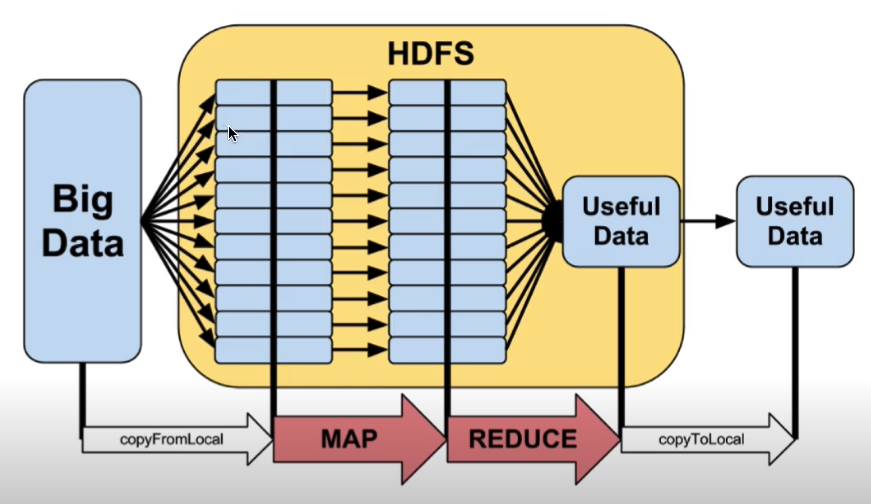

MapReduce
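To make the three MapReduce phases concrete, here is a minimal pure-Python word-count sketch. It only illustrates the map, shuffle, and reduce steps on a list of strings; it is not Hadoop's API, and on Dataproc the same logic would run as a Hadoop or Spark job across the cluster.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Map: emit a (word, 1) pair for every word in one line of input
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    # Shuffle: group all emitted values by key, as the framework does
    # between the map and reduce phases
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Reduce: combine all values for one key into a single result
    return key, sum(values)


def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


print(word_count(["the cat sat", "The dog sat"]))
# → {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}
```

In a real cluster the map calls run in parallel on the nodes holding each input split, and the shuffle moves data over the network, which is why data locality matters for performance.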

Converting from HDFS to Google Cloud Storage

- Copy data to GCS

- Install the Cloud Storage connector, or copy the data manually (the connector comes preinstalled on Dataproc clusters)

- Update the file prefix in scripts from hdfs:// to gs://

- Run jobs on Dataproc that read input from and write output to GCS
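The prefix update can be scripted. The sketch below assumes the data was copied into a single bucket with the same directory layout it had in HDFS; the function name `hdfs_to_gcs` and the bucket name `my-bucket` are hypothetical. It drops the NameNode host and port and keeps the path.

```python
import re

# Matches hdfs://<namenode[:port]>/<path>, stopping at whitespace or quotes.
# The authority part may be empty, as in hdfs:///data/file.csv.
HDFS_URI = re.compile(r"hdfs://[^/\s'\"]*(/[^\s'\"]*)")


def hdfs_to_gcs(text, bucket):
    """Rewrite every hdfs:// URI in a script to a gs:// URI in `bucket`.

    Assumes the HDFS path layout was preserved when copying to GCS.
    """
    return HDFS_URI.sub(lambda m: f"gs://{bucket}{m.group(1)}", text)


script = "df = spark.read.csv('hdfs://namenode:8020/data/sales.csv')"
print(hdfs_to_gcs(script, "my-bucket"))
# → df = spark.read.csv('gs://my-bucket/data/sales.csv')
```

A regex keeps the rewrite mechanical, but it is worth reviewing the diff afterwards, since URIs built by string concatenation in a script will not be caught.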

Dataproc performance optimization

- Keep your data close to your cluster

- Place the Dataproc cluster in the same region as the storage bucket

- Use larger persistent disks: persistent disk throughput scales with disk size

- Use SSD persistent disks instead of HDD

- Allocate more worker VMs

- Use preemptible VMs (as secondary workers) to save on costs
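The tips above map onto fields of a Dataproc cluster definition. The sketch below builds one as a plain dict, assuming the field names of the Dataproc `Cluster` resource (`gce_cluster_config`, `master_config`, `worker_config`, `secondary_worker_config`, `disk_config`, `preemptibility`); the function name, cluster name, machine type, and default sizes are hypothetical choices, and the region check only handles regional buckets, not multi-region ones such as `US`.

```python
def build_cluster_config(project_id, region, zone, bucket_location,
                         num_workers=2, num_preemptible=2,
                         disk_gb=500, disk_type="pd-ssd"):
    """Return a hypothetical Dataproc cluster definition as a dict."""
    # Keep data close: require a regional bucket in the cluster's region
    if bucket_location.lower() != region.lower():
        raise ValueError(f"bucket in {bucket_location}, cluster in {region}")
    if not zone.startswith(region + "-"):
        raise ValueError(f"zone {zone} is not in region {region}")

    def disk():
        # Larger, SSD-backed persistent disks for better I/O throughput
        return {"boot_disk_type": disk_type, "boot_disk_size_gb": disk_gb}

    machine = "n1-standard-4"  # hypothetical machine type
    return {
        "project_id": project_id,
        "cluster_name": "example-cluster",
        "config": {
            "gce_cluster_config": {"zone_uri": zone},
            "master_config": {"num_instances": 1, "machine_type_uri": machine,
                              "disk_config": disk()},
            # More primary workers = more parallelism
            "worker_config": {"num_instances": num_workers,
                              "machine_type_uri": machine,
                              "disk_config": disk()},
            # Cheaper preemptible VMs added as secondary workers
            "secondary_worker_config": {"num_instances": num_preemptible,
                                        "preemptibility": "PREEMPTIBLE"},
        },
    }


cluster = build_cluster_config("my-project", "us-central1",
                               "us-central1-a", "US-CENTRAL1")
print(cluster["config"]["worker_config"]["disk_config"])
# → {'boot_disk_type': 'pd-ssd', 'boot_disk_size_gb': 500}
```

With the `google-cloud-dataproc` client library, a dict shaped like this could roughly be passed as the `cluster` field of a `ClusterControllerClient.create_cluster` request alongside `project_id` and `region`; check the current API reference before relying on the exact field names. Preemptible workers can be reclaimed at any time, so they suit extra processing capacity rather than HDFS storage.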