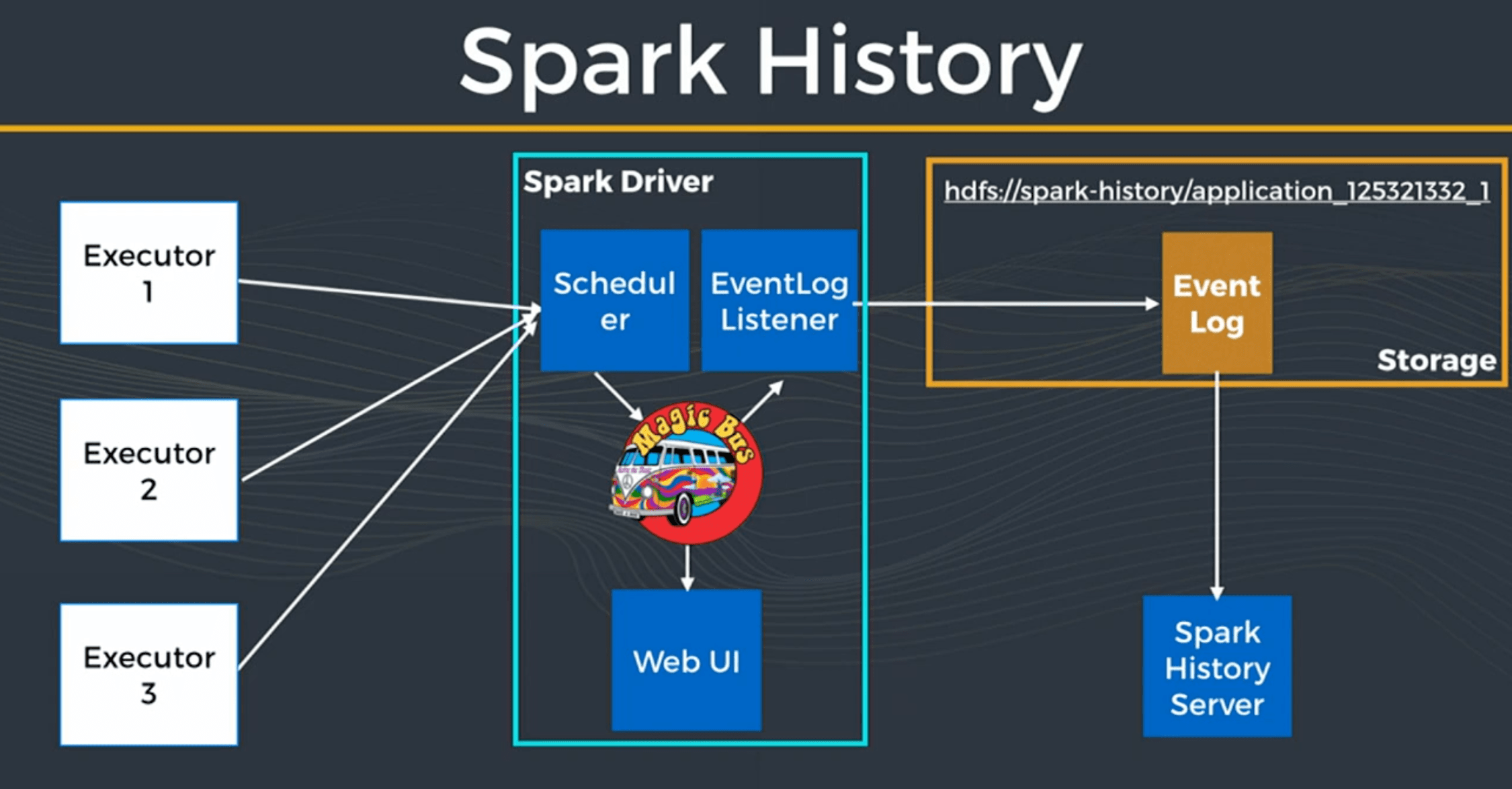

When a Spark job runs, the driver collects logs from the different executors and sends events through an event bus to both the Web UI and the EventLog listener simultaneously.

The EventLog listener writes the events to a directory, for example in HDFS, where they are stored. The Spark history server then reads them from there and exposes them in its interface.
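As a minimal sketch of how event logging is usually switched on, the snippet below sets the standard `spark.eventLog.enabled` and `spark.eventLog.dir` properties on a `SparkConf`. The HDFS path, the object name and the application name are placeholders of mine, not values from this setup. The history server has to read the same directory, which is configured on its side with `spark.history.fs.logDirectory`.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

object EventLogExample {
  // Placeholder directory: replace with a location the history server can read.
  val eventLogDir = "hdfs:///spark-logs"

  def buildConf(): SparkConf =
    new SparkConf()
      .setAppName("event-log-example")        // hypothetical application name
      .set("spark.eventLog.enabled", "true")  // turn on the EventLog listener
      .set("spark.eventLog.dir", eventLogDir) // where the driver writes the events

  def main(args: Array[String]): Unit = {
    // The master is expected to come from spark-submit.
    val spark = SparkSession.builder().config(buildConf()).getOrCreate()
    spark.range(1000).count() // any job; its events are written to eventLogDir
    spark.stop()
  }
}
```

The same two properties can also be passed with `--conf` on `spark-submit` or placed in `spark-defaults.conf` instead of being set in code.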

We can define our own custom listener, but several listeners have already been developed and can be used directly. Here I will present three examples.
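To give an idea of what a custom listener looks like, here is a small sketch that extends Spark's `SparkListener` class and prints one line per completed stage and job. The class name `StageTimingListener` and the output format are my own illustration; only the `SparkListener` callbacks and event fields come from Spark itself.

```scala
import org.apache.spark.scheduler.{SparkListener, SparkListenerJobEnd, SparkListenerStageCompleted}

// Hypothetical listener: prints a summary line for each finished stage and job.
class StageTimingListener extends SparkListener {

  override def onStageCompleted(stageCompleted: SparkListenerStageCompleted): Unit = {
    val info = stageCompleted.stageInfo
    // submissionTime and completionTime are Options holding epoch milliseconds.
    val durationMs = for {
      start <- info.submissionTime
      end   <- info.completionTime
    } yield end - start
    val duration = durationMs.map(d => s"$d ms").getOrElse("unknown")
    println(s"stage ${info.stageId} (${info.name}): ${info.numTasks} tasks, duration $duration")
  }

  override def onJobEnd(jobEnd: SparkListenerJobEnd): Unit =
    println(s"job ${jobEnd.jobId} ended with ${jobEnd.jobResult}")
}
```

Such a listener can be registered at runtime with `sc.addSparkListener(new StageTimingListener)`, or at submit time through the `spark.extraListeners` property, which takes a comma-separated list of fully qualified class names.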

sparklens

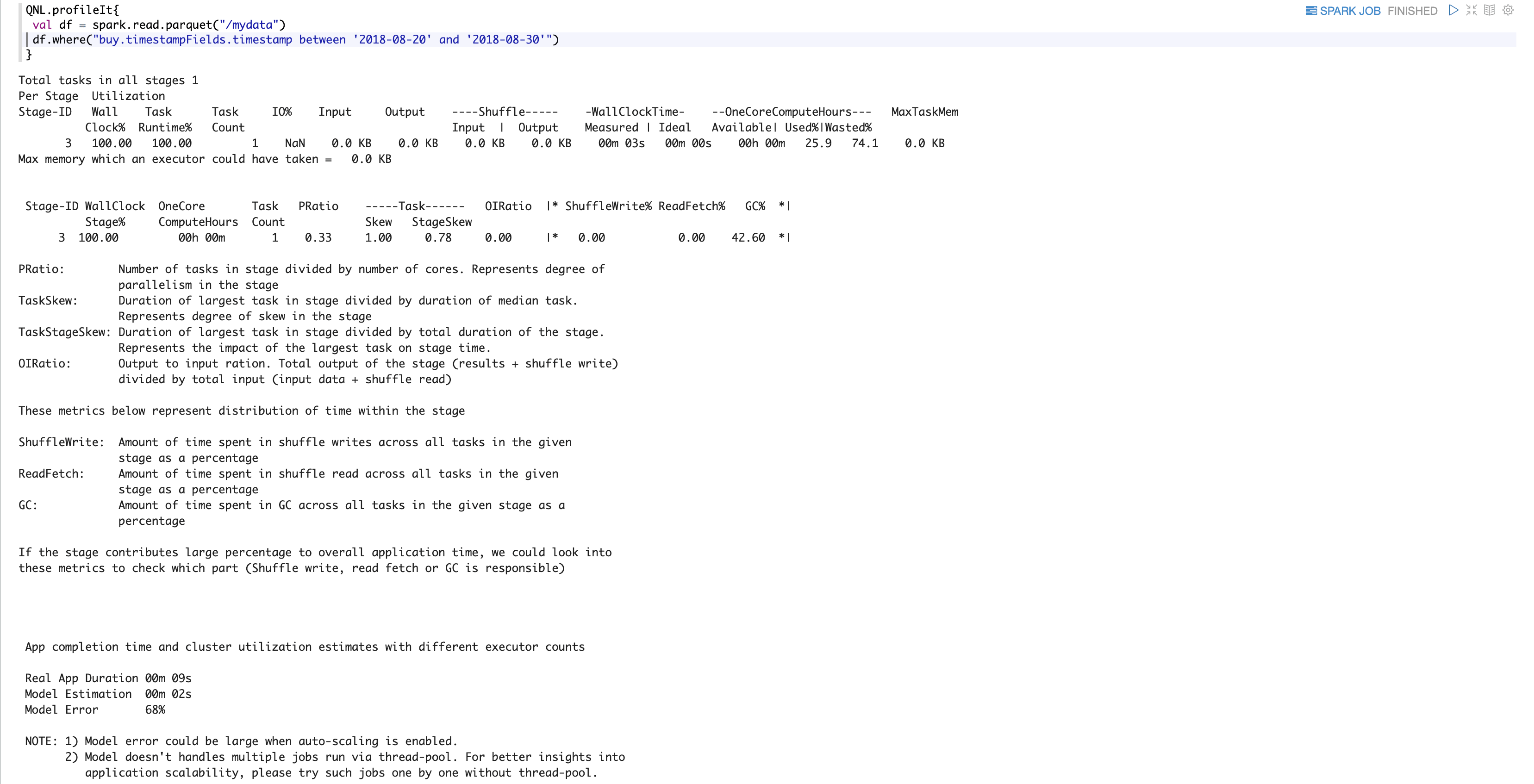

Sparklens is a profiling tool for Spark with a built-in Spark scheduler simulator. It reports:

- The estimated completion time and estimated cluster utilisation with different numbers of executors.
- A job/stage timeline which shows how the parallel stages were scheduled within a job. This makes it easy to visualise the DAG with stage dependencies at the job level.

Here is a screen capture of a Sparklens report. (In my example, I added the Sparklens jar to the classpath of Zeppelin and imported the Sparklens package, after which I can use Sparklens directly.)

Import the package:

```scala
import com.qubole.sparklens.QuboleNotebookListener
```
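Once the package is imported, a notebook paragraph along the following lines registers the listener and profiles a piece of code. This is a sketch based on the usage pattern described in the Sparklens README (`QuboleNotebookListener`, `profileIt`), so check it against the version of the jar you have. It assumes `sc` is the SparkContext that Zeppelin provides, and the workload inside the block is just a placeholder.

```scala
import com.qubole.sparklens.QuboleNotebookListener

// `sc` is assumed to be the SparkContext injected by the Zeppelin Spark interpreter.
val QNL = new QuboleNotebookListener(sc.getConf)
sc.addSparkListener(QNL)

// Wrap the code to profile; the Sparklens report should be printed
// in the paragraph output once the block has finished.
QNL.profileIt {
  sc.parallelize(1 to 1000000, 8).map(_ * 2L).reduce(_ + _) // placeholder workload
}
```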

sparklint

Sparklint is a profiling tool for Spark with advanced metrics and better visualization of your Spark application's resource utilization. It helps you find out where the bottlenecks are.

We can use it in the application code, or we can use it to analyse event logs. At the time of writing, the current version of Sparklint is 1.0.13, and it cannot yet analyse history server event logs if they are compressed by the configuration. We can, however, decompress them to JSON files and then work on those.
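For the first option, attaching Sparklint to a live application, a minimal sketch using Spark's standard `spark.extraListeners` property could look like this. The listener class name `com.groupon.sparklint.SparklintListener` is the one I recall from the Sparklint README, so treat it as an assumption and verify it for your version. The object name, application name and workload are placeholders, and the Sparklint jar is assumed to be on the driver classpath.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

object SparklintExample {
  // Assumption: listener class name as given in the Sparklint README.
  val listenerClass = "com.groupon.sparklint.SparklintListener"

  def buildConf(): SparkConf =
    new SparkConf()
      .setAppName("sparklint-example")            // hypothetical application name
      .set("spark.extraListeners", listenerClass) // Spark instantiates the listener at startup

  def main(args: Array[String]): Unit = {
    // The master is expected to come from spark-submit.
    val spark = SparkSession.builder().config(buildConf()).getOrCreate()
    spark.range(0, 10000000L).selectExpr("sum(id)").show() // placeholder workload
    spark.stop()
  }
}
```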

Here is a screen capture of Sparklint.

sparkMeasure

sparkMeasure is a tool for performance troubleshooting of Apache Spark workloads. It simplifies the collection and analysis of Spark performance metrics.

It is a tool with multiple uses: it can instrument both interactive (notebook) and batch workloads.
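As an illustration of the batch use case, the sketch below wraps a query in sparkMeasure's `StageMetrics.runAndMeasure`, which runs the code and prints aggregated stage metrics afterwards. The object name, application name and query are placeholders, and the sparkMeasure jar is assumed to be available, for example through `--packages` on `spark-submit`.

```scala
import ch.cern.sparkmeasure.StageMetrics
import org.apache.spark.sql.SparkSession

object SparkMeasureExample {
  def main(args: Array[String]): Unit = {
    // The master is expected to come from spark-submit.
    val spark = SparkSession.builder().appName("sparkmeasure-example").getOrCreate()

    // StageMetrics registers a listener that collects metrics at stage granularity.
    val stageMetrics = StageMetrics(spark)

    // Run the workload and print the collected metrics when it completes.
    stageMetrics.runAndMeasure {
      spark.sql("select count(*) from range(1000) cross join range(1000)").show()
    }

    spark.stop()
  }
}
```

In a notebook the same two calls can be used directly on the `spark` session that the notebook provides, without the surrounding `object` and `main`.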